Digital Experience Analytics: The Ultimate Guide to Optimizing User Journeys

By Daniela Diaz • Updated 2025

TL;DR: In 2025, traffic is vanity. Experience is ROI. Digital Experience Analytics combines quantitative and behavioral data to reveal why users churn, hesitate, or convert. GA4 tells you what happened. DXA tells you why.

DXA blends heatmaps, session replay, funnels, and Voice of Customer into a single framework that surfaces hidden friction and accelerates growth.


What is Digital Experience Analytics (DXA)?

Digital Experience Analytics refers to the collection, visualization, and interpretation of user behavior across digital environments. Unlike traditional analytics focused on pageviews or traffic sources, DXA captures friction, frustration, and intent.

DXA vs. Traditional Web Analytics (GA4)

Web Analytics (GA4): User reached Checkout and left.

DXA: User clicked the payment toggle 3 times in quick succession (a rage click), hit a validation error, and abandoned the cart.

The 3 Pillars: Behavior, Journey, and Voice of Customer

- Behavioral Data: Clicks, hover trails, scroll depth, rage clicks.

- Journey Data: Sequence of interactions, loops, dead ends.

- Voice of Customer: Direct feedback, NPS, in-app surveys.

Why Digital Experience Analytics Matters for Business Growth

Reducing Customer Churn

Friction kills retention. DXA exposes broken UI patterns, confusing navigation, and the bugs users hit while trying to complete tasks.

Increasing Customer Lifetime Value (CLV)

When users discover value quickly, they upgrade faster, stay longer, and require less support. Heatmaps and replays help teams find the paths that lead users to that value fastest.

Validating Design Decisions with Data

Stop debating opinions. Test features, monitor replays, measure real usage.

Key Components of a DXA Strategy

1. Session Replays (Visualizing the “Why”)

Watch real user journeys. Identify hesitation, confusion, errors.

2. Interactive Heatmaps (Engagement)

Click, scroll, and mouse-movement maps reveal where attention concentrates and which areas users ignore (dead zones).

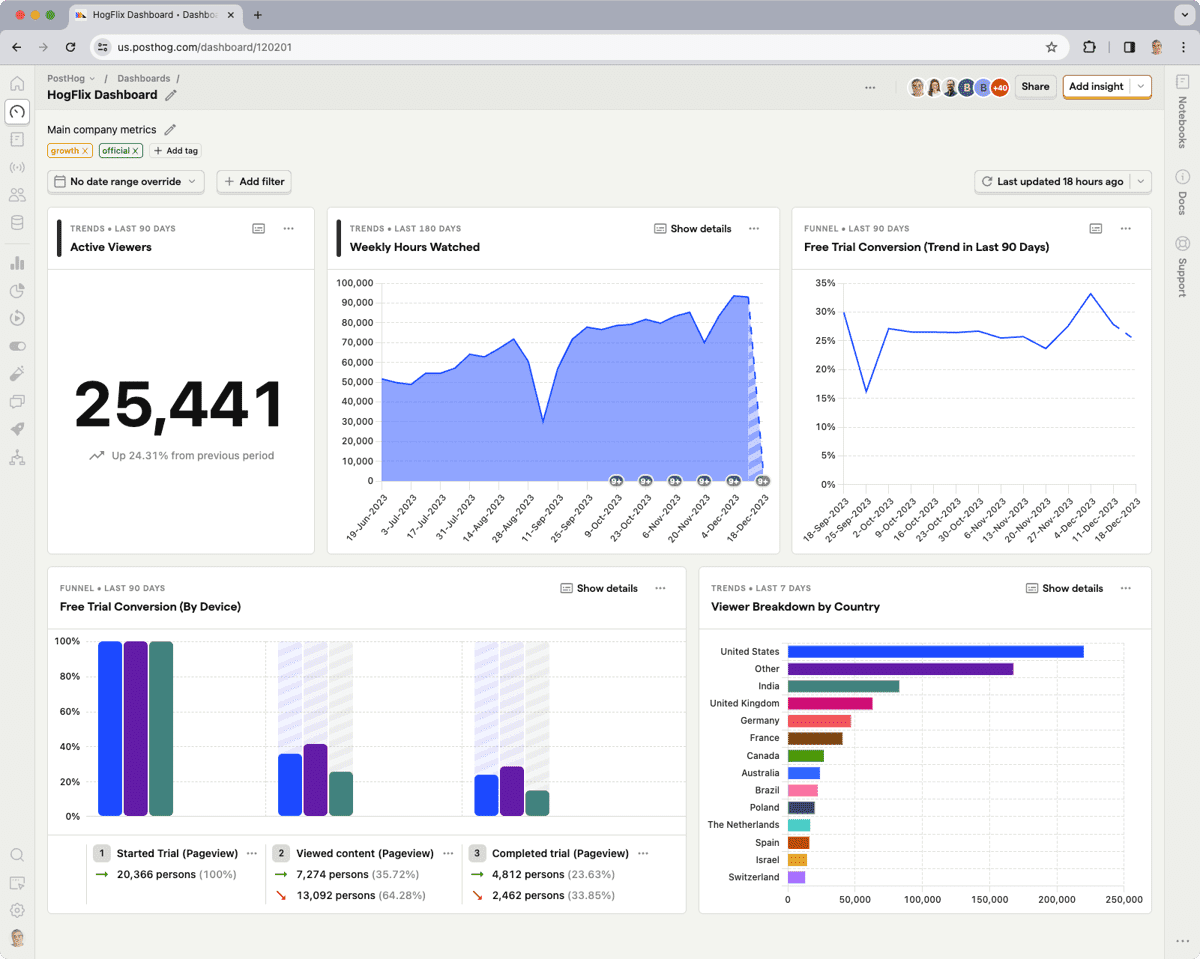
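Under the hood, a click heatmap is essentially a two-dimensional histogram. Click coordinates are bucketed into grid cells and counted, and the counts are rendered as color. The Python sketch below assumes clicks arrive as hypothetical `(x, y)` pixel coordinates on a single page layout. A real tool would also have to handle different screen sizes and dynamic content, which this ignores.

```python
from collections import Counter

def bin_clicks(clicks, cell_size=50):
    """Count clicks per grid cell of cell_size x cell_size pixels.

    clicks: iterable of hypothetical (x, y) pixel coordinates.
    Returns a Counter keyed by (column, row) cell index.
    """
    grid = Counter()
    for x, y in clicks:
        # Integer division maps each pixel to the cell that contains it.
        grid[(x // cell_size, y // cell_size)] += 1
    return grid

clicks = [(12, 30), (40, 45), (260, 510), (270, 520), (265, 505), (900, 40)]
heat = bin_clicks(clicks)
for cell, count in heat.most_common(2):
    print(cell, count)  # hottest cells first: (5, 10) 3, then (0, 0) 2
```

The cell size controls the trade-off. Smaller cells give finer detail but sparser counts.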

3. Funnel Analysis (Drop-offs)

Pinpoint the exact step at which users abandon a task, such as checkout, signup, or onboarding.
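Here is a minimal sketch of funnel drop-off analysis. It assumes each user's activity has already been reduced to a set of named steps. The step names (`view_cart`, `shipping`, `payment`, `confirmation`) and the helper functions are hypothetical. A user only counts toward a step if they also reached every earlier step, which is one common, strict funnel definition.

```python
# Hypothetical checkout funnel, in order.
FUNNEL = ["view_cart", "shipping", "payment", "confirmation"]

def funnel_counts(user_steps, funnel):
    """Users reaching each step who also reached every earlier step."""
    reached = set(user_steps)  # start from every user in the data
    counts = []
    for step in funnel:
        reached = {u for u in reached if step in user_steps[u]}
        counts.append(len(reached))
    return counts

def biggest_drop(funnel, counts):
    """Return (from_step, to_step, drop_rate) for the worst transition."""
    drops = []
    for i in range(1, len(funnel)):
        prev = counts[i - 1]
        rate = 1 - counts[i] / prev if prev else 0.0
        drops.append((funnel[i - 1], funnel[i], rate))
    return max(drops, key=lambda d: d[2])

users = {
    "u1": {"view_cart", "shipping", "payment", "confirmation"},
    "u2": {"view_cart", "shipping", "payment"},
    "u3": {"view_cart", "shipping"},
    "u4": {"view_cart", "payment"},  # skipped shipping, so not counted past step 1
    "u5": {"view_cart"},
}
counts = funnel_counts(users, FUNNEL)
print(counts)                        # [5, 3, 2, 1]
print(biggest_drop(FUNNEL, counts))  # ('payment', 'confirmation', 0.5)
```

In this toy data the payment-to-confirmation transition loses half of the remaining users, so that step would be the first place to watch replays.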

4. Voice of Customer (VoC)

Collect contextual feedback at the moment frustration occurs.

5. Error & Performance Tracking

Detect JavaScript failures, slow rendering, and blocking UI events.

How to Analyze User Behavior Using FullSession

Step 1: Map the Customer Journey

Chart the paths users actually take through key flows, then break them down by acquisition channel, device, or intent to reveal patterns at scale.
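A simple way to start mapping journeys is to count page-to-page transitions and split those counts by a segment attribute such as device. The sketch below assumes sessions are hypothetical dicts with a `device` label and an ordered list of `pages`. It also flags sessions that revisit a page, which is a rough proxy for the loops described in the journey-data pillar.

```python
from collections import Counter, defaultdict

def transitions_by_segment(sessions, key="device"):
    """Count (from_page, to_page) transitions for each value of `key`."""
    by_segment = defaultdict(Counter)
    for s in sessions:
        pages = s["pages"]
        # zip pairs each page with the page visited right after it.
        by_segment[s[key]].update(zip(pages, pages[1:]))
    return by_segment

def has_loop(pages):
    """True if the session revisits any page (a possible navigation loop)."""
    return len(set(pages)) < len(pages)

sessions = [
    {"device": "mobile", "pages": ["/home", "/pricing", "/signup"]},
    {"device": "mobile", "pages": ["/home", "/pricing", "/home", "/pricing"]},
    {"device": "desktop", "pages": ["/home", "/signup"]},
]
flows = transitions_by_segment(sessions)
print(flows["mobile"].most_common(1))            # [(('/home', '/pricing'), 3)]
print([has_loop(s["pages"]) for s in sessions])  # [False, True, False]
```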

Step 2: Segment Users by Behavior

Create targeted groups like “Added to cart but never checked out.”
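Behavioral segments like this come down to set logic over event names. The sketch assumes a hypothetical flat event log of `user_id`/`name` dicts. The names `add_to_cart` and `checkout_complete` are illustrative. This is not how any particular tool stores or queries segments.

```python
from collections import defaultdict

def segment(events, did, never):
    """Users who fired every event in `did` and none of the events in `never`."""
    names_by_user = defaultdict(set)
    for e in events:
        names_by_user[e["user_id"]].add(e["name"])
    return sorted(
        user for user, names in names_by_user.items()
        if did <= names and not (names & never)  # subset test, then no overlap
    )

events = [
    {"user_id": "a", "name": "add_to_cart"},
    {"user_id": "a", "name": "checkout_complete"},
    {"user_id": "b", "name": "add_to_cart"},
    {"user_id": "c", "name": "page_view"},
    {"user_id": "d", "name": "add_to_cart"},
]
print(segment(events, did={"add_to_cart"}, never={"checkout_complete"}))  # ['b', 'd']
```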

Step 3: Identify Friction Points (Rage Clicks)

Sort recordings by frustration signals to triage UI issues rapidly.
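Rage clicks are commonly detected with a burst rule: several clicks on the same element within a short time window. The sketch below uses hypothetical thresholds (three clicks within 1,000 ms) and a hypothetical event shape of `(timestamp_ms, element_id)` pairs. It then ranks sessions by burst count so the most frustrated sessions surface first.

```python
from collections import defaultdict

def count_rage_bursts(clicks, min_clicks=3, window_ms=1000):
    """Count bursts of >= min_clicks clicks on one element within window_ms.

    clicks: list of (timestamp_ms, element_id) pairs for a single session.
    """
    times_by_element = defaultdict(list)
    for ts, element in clicks:
        times_by_element[element].append(ts)

    bursts = 0
    for times in times_by_element.values():
        times.sort()
        i = 0
        while i < len(times):
            # Advance j to the last click still inside the window opened at times[i].
            j = i
            while j + 1 < len(times) and times[j + 1] - times[i] <= window_ms:
                j += 1
            if j - i + 1 >= min_clicks:
                bursts += 1
                i = j + 1  # skip past this burst so clicks are not counted twice
            else:
                i += 1
    return bursts

sessions = {
    "s1": [(0, "pay-toggle"), (250, "pay-toggle"), (480, "pay-toggle"), (5000, "submit")],
    "s2": [(0, "nav"), (3000, "nav")],
    "s3": [(0, "coupon"), (200, "coupon"), (400, "coupon"), (600, "coupon"),
           (9000, "size"), (9100, "size"), (9300, "size")],
}
ranked = sorted(sessions, key=lambda sid: count_rage_bursts(sessions[sid]), reverse=True)
print(ranked)  # ['s3', 's1', 's2']
```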

Step 4: Optimize and A/B Test

Ship improvements, compare the affected metrics before and after the change (or between test variants), and iterate.
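You need to know whether a variant's lift is more than noise. One standard option is a two-sided, two-proportion z-test on conversion counts, though a given tool may use a different method. The numbers below are made up. The test assumes the sample size was fixed before anyone looked at the results.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates (pooled variance).

    Returns (z, p_value); positive z means variant B converts better than A.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Control: 200 of 5,000 sessions converted (4%); variant: 250 of 5,000 (5%).
z, p = two_proportion_z_test(200, 5000, 250, 5000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these inputs z comes out at roughly 2.4 and p falls below 0.05. By that conventional threshold, the lift would be treated as unlikely to be pure chance.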

Essential DXA Metrics to Track

- Frustration Signals: Rage clicks, error clicks, dead taps.

- Time-to-Task Completion: Efficiency indicator for key journeys.

- Conversion Rate: Completion of desired actions.

- Retention Rate: Share of users who return after their first session.
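Time-to-task, conversion, and retention can all be computed from session records. This sketch assumes hypothetical sessions tagged with a `user` and a `task_seconds` value, which is None when the task was not completed. It measures conversion per session and treats a user as retained if they have more than one session. Real definitions vary by team and tool.

```python
from collections import Counter
from statistics import median

def conversion_rate(sessions):
    """Share of sessions in which the target task was completed."""
    return sum(s["task_seconds"] is not None for s in sessions) / len(sessions)

def median_time_to_task(sessions):
    """Median completion time in seconds, over completed sessions only."""
    done = [s["task_seconds"] for s in sessions if s["task_seconds"] is not None]
    return median(done) if done else None

def retention_rate(sessions):
    """Share of users with at least one session after their first."""
    per_user = Counter(s["user"] for s in sessions)
    return sum(n > 1 for n in per_user.values()) / len(per_user)

sessions = [
    {"user": "a", "task_seconds": 42},
    {"user": "a", "task_seconds": None},
    {"user": "b", "task_seconds": 95},
    {"user": "c", "task_seconds": None},
]
print(conversion_rate(sessions))      # 0.5
print(median_time_to_task(sessions))  # 68.5
print(retention_rate(sessions))       # 1 of 3 users returned
```

The median is used rather than the mean because a few very slow sessions can distort an average completion time.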

Conclusion: Moving From Data to Insight

DXA is not a tool. It’s a culture shift. When teams watch real behavior instead of relying on dashboards alone, they build products users actually want to return to.

Understand users. Optimize their journey. Grow your business.

FAQs

What is the difference between Customer Experience (CX) and Digital Experience (DX)?

CX encompasses every interaction, including offline ones. DX focuses exclusively on digital touchpoints such as websites, apps, and chatbots.

What does a Digital Experience Analyst do?

They analyze behavioral data (heatmaps, funnels, and session replays) to uncover friction and recommend improvements that drive growth.

Is DXA GDPR compliant?

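It can be. Tools like FullSession mask sensitive fields and offer enterprise-level privacy settings, but compliance also depends on how you configure the tool and on having a lawful basis, such as user consent, for recording sessions.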
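Field masking can be illustrated with a small sketch that redacts sensitive values from an event before it is stored. This is not FullSession's implementation. The field names, the email pattern, and the event shape are all hypothetical.

```python
import re

# Hypothetical field names treated as sensitive; a real setup would be configured per site.
SENSITIVE_KEYS = {"password", "card_number", "cvv"}
# Rough email pattern for illustration only; it will not catch every address.
EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")

def mask_event(event):
    """Return a copy of the event with sensitive fields and email-like text redacted."""
    masked = {}
    for key, value in event.items():
        if key in SENSITIVE_KEYS:
            masked[key] = "***"  # drop the value entirely
        elif isinstance(value, str):
            masked[key] = EMAIL_RE.sub("[email]", value)  # scrub free text
        else:
            masked[key] = value  # non-text values pass through unchanged
    return masked

event = {"field": "feedback", "value": "email me at jane@example.com",
         "card_number": "4111111111111111", "x": 120}
print(mask_event(event))
```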

Can DXA tools replace Google Analytics?

No. They are complementary. GA measures traffic; DXA measures human behavior.